Content Coin: Hype, Hope, or a Second Spring for the Creator Economy?

Background: Recently, Base founder Jesse and Solana founder Toly engaged in a heated debate on the "value of content tokens." The Zora platform mints every piece of user-posted social content into a token that is freely tradable on the Base chain, which has sparked widespread discussion. The core point of contention is that Jesse believes content tokens represent the differing value of individual pieces of content and follow their own intrinsic logic, while Toly questions the lack of a value verification mechanism (such as explicit rights like an ad revenue share). Jesse later acknowledged that, for now, it is a value game aimed at rewarding creators. Another key driver of the discussion is the significant recent surge in the price of Zora platform tokens. So the question arises: Do content coins have any "fundamentals"? Are those fundamentals based on the value of creators' content, or on the prosperity of Base and other public chain ecosystems themselves? Will the content economy usher in a "second spring" as a result?

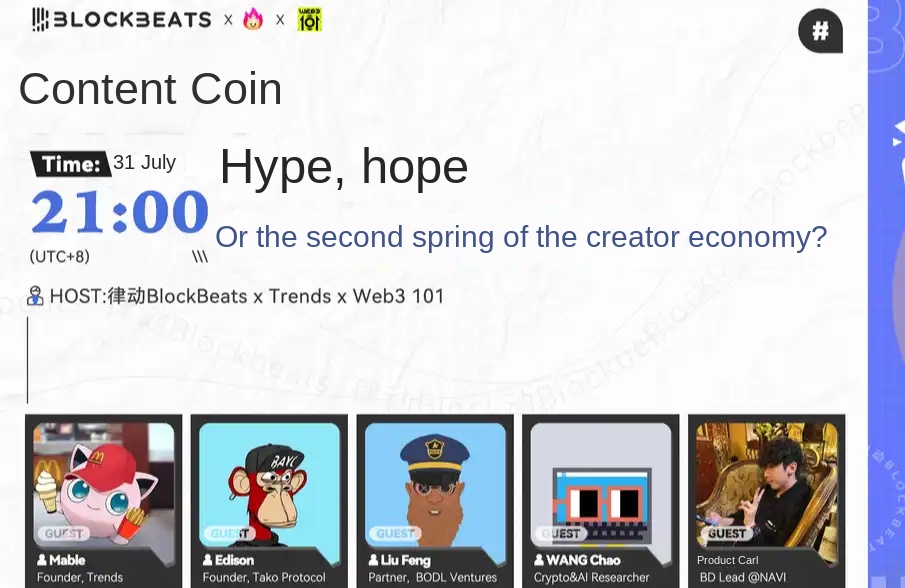

On the evening of July 31st, BlockBeats invited Trends founder Mable, Tako Protocol founder Edison, BODL Ventures partner Liu Feng, Crypto and AI researcher WANG Chao, and NAVI Protocol Business Manager Carl to discuss the development and trends of content tokens and creator economies with the theme "Content Token: Hype, Hope, or a Second Spring for Creator Economies?"

BlockBeats: Welcome everyone to today's Space, and thank you to all the guests for joining us. Today, our focus is on "content tokens," a recent hot topic that has sparked widespread attention and discussion within the crypto community. First, please express your stance in a sentence or two on the "content token" debate.

Mable: The discussion on content tokenization is indeed complex. Overall, I agree with Jesse's proposition of the "tokenization of content" as a fundamental direction. However, it needs to be clear that not all content is suitable for tokenization. In fact, when a piece of content is tokenized and lacks market trading activity, it implies a lack of market-recognized value. Toly's concept of "digital slop" is insightful—it reminds us that not all on-chain information has trading value or needs pricing. However, Jesse's vision of "potentially millions of content tokens representing different information appearing daily in the future," I believe, does represent a possible development direction.

Edison: I agree with Mable's point of view. Jesse's core argument is that every piece of content has its value. But as Toly sarcastically pointed out, if this is just a gambling game, then let's face it honestly, instead of engaging in financial speculation disguised as "empowering content creators."

Liu Feng: I believe that currently these content tokens are essentially garbage.

WANG Chao: As a newcomer to this field, I just caught up on some background a couple of days ago. At the moment, I haven't fully grasped what they are hyping up, but based on my current understanding, I do not agree with Jesse's stance. I think these attempts are trying to analyze and package social behavior or content expression in a highly financialized, structured manner, which severely underestimates the backlash effect on the entire ecosystem after the introduction of financial leverage. From the current situation, I do not consider this a mature or effective solution.

Carl: I don't take sides. From an objective perspective, the key trigger for this debate was the analysis by DelComplex analyst Sterling, who pointed out that about 80% of the content tokens on the current Pump.fun platform end up either losing liquidity or becoming worthless. His conclusion, which is a valuable reference point, is that Zora is essentially "old wine in a new bottle" and that its business model has not fundamentally broken through the existing paradigm. I strongly agree with this statement. Although Jesse's proposition that "all creative content has value" is worthy of recognition in itself, when you analyze the asset attributes of Zora and Pump.fun specifically, it is indeed difficult to distinguish the two platforms based on their inherent characteristics.

BlockBeats: Are the underlying logics of products like Zora, pump.fun, Trends.fun, and Friend Tech that enable "content-to-coin" the same? Are these different types of mechanisms? (Such as dual-token model, prediction markets, and one-post-one-coin bonding curve)

Edison: From an investment perspective, these types of products are fundamentally similar, all attempting to "monetize" or "financialize" content or social behavior. If we look back at the development trajectory of Friend.tech, it initially sought to achieve the "securitization" of social relationships, a goal that itself carries a certain level of seriousness. However, even so, most projects ultimately still cannot escape the fate of being short-lived.

The operation mechanism of Zora is to automatically tokenize user-generated content, but the problem lies in its lack of openness. Despite wearing the cloak of decentralization, at its core, it is more like a Web2 application simply grafting a token system. It requires users to fully migrate to a new platform, much like asking people to accept another Instagram, making the user migration cost too high. Apart from the token outer shell, it has not built a deeper value system.

In contrast, Trends.Fun chose a different path: it filters high-quality content from existing social platforms (currently mainly focusing on Twitter) and incentivizes users to discover, mine, and recommend potential high-quality content through a tokenization mechanism. In this process, the token actually plays a dual role: it is both an information filter and a value capture tool.

Overall, these platforms all have pronounced speculative attributes and are essentially PVP game structures. However, they each have different focuses: Zora emphasizes content nativeness, Friend.tech focuses on social relationship reconstruction, Pump.fun pursues emotional ignition, and Trends.Fun focuses on content discovery and repackaging. Although these differentiated attempts are heading in different directions, they are all still exploring new possibilities for content monetization.

Mable: You just mentioned that Zora is not open enough. Can you elaborate specifically on what "not open enough" means?

Edison: The so-called lack of openness is measured against the core spirit of Web3: "composability." Web3 has always advocated that each protocol or application should focus on doing one thing and that these pieces can be flexibly combined with each other. However, Zora, like Farcaster, which we discussed before, tries to establish a closed application ecosystem: from account registration and content writing to the final content tokenization, the entire process must be completed within the same app.

This closed-loop architecture is more like the logic of Web2—it requires users to enter a new platform, start from scratch, which is a very high barrier for content creators or users. The cost is obvious: the amount of content on the Zora platform is far from comparable to Twitter or other open social platforms, and the content quality is limited, unable to support a more extensive and high-quality content ecosystem. If it opens data interfaces and supports cross-platform content access, it is possible to form a multi-ecosystem linkage like today's Facebook, Twitter, TikTok.

Liu Feng: I understand that you mentioned it's not open because Zora requires content to be generated by its platform and recorded on its chain. However, the reality is, currently, 99.99% of content is actually not on-chain.

Edison: Yes, it not only requires data to be put on the chain but also demands that users utilize its full account system to perform all operations within their own app. This is evidently a form of centralization, a logic reminiscent of Web2.

Liu Feng: However, from another perspective, this approach by Zora also ensures the "nativeness" of content — content generated within the platform ecosystem is truly able to "carry" this so-called content token. If content is merely linked from Web2 and lacks the original ownership relationship, content and tokens are actually disconnected.

Edison: The issue, though, is that content itself can be replicated. There has never been a piece of content that is truly "owned." You post something, and it can be mirrored or quoted on another platform at any time.

Liu Feng: However, in architectures like Mirror, the content is indeed put on the chain, granting the original author ownership. Trends by Mable also implements a similar approach.

Mable: For Trends content, such as tweets deleted by Peter Schiff or Elon Musk, we store them on Meta Pixel. It's not just a simple link but rather archiving the tweet itself. Trends creates mirrored versions of this content, ensuring that even if the original platform deletes it, it remains accessible on-chain.

You just mentioned "openness." I understand Edison's point as: "The creation, dissemination, and tokenization of content can actually be completely decoupled." They do not need to occur on the same platform. For example, content can originate on Twitter, spread through Twitter's social graph, and then be tokenized on Trends, where Trends archives and puts it on-chain. This decoupled structure actually aligns more with Web3's "composability" logic, maximizing the efficiency of each ecosystem in capturing value.

Edison: Yes, this is the typical composable-architecture thinking from the earlier DeFi world.

BlockBeats: So, content token platforms don't really need to overly concern themselves with whether the content is "native." Instead, they should focus more on how to design mechanisms to identify and capture content worthy of tokenization. In fact, what truly matters is how to build a PVP game structure among content.

Edison: Exactly, the core issue is: how do you find high-quality content that is "worthy of being tokenized"? This is far more important than where the content was initially published.

BlockBeats: From "Tweet NFT" to Mirror, then to Friend.tech, and now to content tokens, it seems that the idea of "turning content into collectibles" has never been successful. Do you think this is a product logic issue? How can we find high-quality content suitable for tokenization?

WANG Chao: I strongly agree with what Edison said about "how to find good content being the most crucial." In the past, I wasn't actually interested in the concept of "content tokens." The reason is that I've never been able to figure out what it really means to financialize content. Does it truly create the outcome we desire? If it's just to generate hype once, a short-term price pump, then indeed some products have achieved that. But if we take a more idealistic, less speculative view, I've never understood the true value of these projects. My question is: what kind of asset is truly worth financializing? Where does the value of financialization lie?

This also leads to another point of consideration: when we discuss "content tokens," what are we really trading? Is it the content itself? Attention? Or something else? What behaviors does this mechanism incentivize? What consequences does it ultimately bring? These are questions that every platform needs to seriously consider. Personally, I think that if this financialization mechanism could ultimately filter out quality content and allow it to be disseminated, that would indeed be a meaningful thing, but can it really achieve this goal? I remain skeptical.

Carl: I think we need to recognize that "good content" also has an expiration date. For example, if someone posts a tweet with a market prediction at a specific time and it is later proven correct, that can be considered content with long-term value. Memes and GIFs, by contrast, can go viral for a short period. They can also be considered "good content," but they are ephemeral. Therefore, when we talk about "content monetization," we must also realize that some content is sustainable (long-lasting), while other content is fleeting.

Currently, the practice of turning this content into tokens is essentially still PVP. You post a meme, then issue a coin, others buy in, the price pumps, and in the end most tokens go to zero. This aligns with Sterling's view quoted earlier that these platforms are mostly just "old wine in new bottles": they may seem to offer a new concept, but fundamentally they are the same old speculative game.

Liu Feng: I would like to raise a relatively basic yet fundamental question. Just now, Chao mentioned that "we actually don't need to deliberately define content coins." I basically agree, but there's a point I think is worth further discussion: those hotly speculated Meme coins on Pump.fun, are they considered "content coins"? This is something I'd like to ask everyone: do we need to redefine the boundaries of "content coins" as well?

BlockBeats: This happens to be our third topic today. There seems to be a trend in which creator economy platforms are becoming more meme-like, while meme launch platforms are taking on creator economy features. So, is today's content coin, like a Meme coin, primarily driven by hype and attention? Where is the boundary between content coins and Meme coins?

Liu Feng: I think these are all excellent questions. I'd like to start with the topic of "Where is the boundary between Meme coins and content coins?" In a narrow sense, we roughly know what "content coins" are, but taking Pump.fun as an example, everyone has attached many narratives to it. Some people think it's a live streaming platform, while others call it a hot content creation platform, or even a news platform.

So here's the question: are the content tokens generated on Pump.fun considered "content coins"? The platform incentivizes users to create content, which is essentially a function of content coins; but if this content ultimately turns into merely a hype-driven Meme coin, does it still qualify as a content coin? This is the first question I want to clarify. The second question is: if the incentive mechanism of content tokens is solely based on attention, is it sustainable?

Mable: I'll respond starting from the previous questions. Just now, Professor WANG Chao asked a core question: "What kind of thing is valuable?" In fact, today we're discussing the concept of "value," which is completely different from our understanding in 2018 and 2019. Back then, we believed that a valuable token should either succeed or go to zero, and it should be hodled long term.

However, the essence of a token is actually a data container. It can carry a lot of content, or it can be extremely lightweight. I completely agree with what Carl just said: everything has a time stamp. If 99% of content is destined to perish, why should we expect 99% of tokens not to go to zero?

So, the value of a content coin depends on whether it has carried genuine attention, emotion, or consensus at a certain point in time. A piece of content that has no value even in the present, when minted into a token, will not attract any buyers, even if it's from Elon Musk.

Some people believe that individuals themselves are pricing the content, and they are not wrong. However, this is also a frontend issue for many content platforms. If everything relies on celebrities to drive engagement, then only a very small number of people's content will receive attention. However, on Trends, we have found that it is not only the content of "influencers" that experiences high-frequency trading. Some small account content can also be widely disseminated due to specific contexts, which is not heavily related to the creator's identity but rather to the content itself receiving "attention in a specific scenario."

So I really like the phrase: "Not all content is of equal value." Some content is just lousy; naturally it will not be purchased, and naturally it will be eliminated by the market. I believe that market mechanisms can filter out content tokens that truly have a consensus, and this consensus is not necessarily "noble" or "correct," but it is authentic because only authentic consensus will bring funding.

Regarding Teacher Liu Feng's proposal of the "Difference Between Meme Coins and Content Coins," I have some personal understanding (which does not represent Trends' official position): Pump.fun is trying to turn content such as live stream clips into tradable assets, and this direction is not wrong. However, its current product design is still more inclined toward "trading over content," with its core being hype rather than building a social network.

If we trace back to the original Pump.fun, what it carried in its data container was abstract concepts and online culture. It had no background at all; it purely relied on community consensus for propagation. In comparison, on Trends, a previous article by Meow titled "Social Monies" went viral multiple times in different social graphs and contexts, constantly being rediscovered and disseminated, and its token value was consequently repriced multiple times. This indicates that content tokens can be reactivated with environmental changes.

This highlights a key difference: content tokens can be "combined and reused across contexts," while Meme is more like a "snapshot of abstract emotions." Of course, you can also think that the uniformity of Meme aids liquidity concentration, but if we approach it from the direction of "content tokens," it emphasizes the expression of content across different time and space and the ability to capture value. This is what I believe is the biggest difference between it and Meme coins.

BlockBeats: You just mentioned the "composability of content tokens." What does this specifically refer to? How should different content coins be combined?

Mable: Let me give you a random example (this is not a plan for any specific product, just my personal idea): For instance, if you want to create a token related to Vitalik, you can, of course, choose to tokenize directly, but another approach is: you can select some content coins from his history that have been frequently traded and always maintained a certain market value, package them into a "basket." This basket represents a collection of content from Vitalik that has been published in the past and market-validated as valuable. This may not necessarily represent Vitalik's "lifetime value," but it does represent a portion of the market consensus that has been "retained." Therefore, trading such a synthetic content token may be more valuable than directly trading a Vitalik security token.

Carl: It sounds a bit like bundling all of Vitalik's signature content coins into an ETF or Content Index Token, right?

Mable: That's one way to understand it. But I want to make it clear that if you really tokenize all of his content, some of it will definitely go to zero. I don't think every piece of content he posts will be traded or valued in the long term; only a part of it can "survive." And those that are selected by the market and retained are the ones that truly hold relatively long-term value. This value itself is relative, just more enduring compared to other more "short-lived" content.
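The basket idea in this exchange can be made concrete with a small sketch. It is purely illustrative and not a description of any product: the field names, the two filter thresholds, and the choice to weight by market cap are all assumptions introduced here, and the coin data is made up.

```python
from dataclasses import dataclass


@dataclass
class ContentCoin:
    """One tokenized piece of content (all fields are hypothetical)."""
    name: str
    market_cap_usd: float
    trades_last_30d: int


def build_basket(coins, min_market_cap_usd, min_trades):
    """Keep coins that pass both filters and weight them by market cap.

    Returns a dict mapping coin name to its weight in the basket.
    The filters stand in for "frequently traded and kept a certain
    market value"; the actual criteria are an open design question.
    """
    survivors = [
        c for c in coins
        if c.market_cap_usd >= min_market_cap_usd
        and c.trades_last_30d >= min_trades
    ]
    total = sum(c.market_cap_usd for c in survivors)
    if total == 0:
        return {}
    return {c.name: c.market_cap_usd / total for c in survivors}


# Made-up example data: two posts the market kept trading, one that died.
coins = [
    ContentCoin("post-a", 300_000, 1_200),
    ContentCoin("post-b", 100_000, 800),
    ContentCoin("post-c", 500, 3),  # effectively went to zero
]
basket = build_basket(coins, min_market_cap_usd=10_000, min_trades=100)
print(basket)
```

With these inputs, only the two surviving posts enter the basket, and the dead one is excluded, which mirrors the point that only the market-retained part of a creator's output carries longer-term value.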

BlockBeats: So, in simple terms, Content Coins are often tangible, pointing to specific content (such as a tweet or a video clip); while Meme Coins are more abstract, carrying an emotion or cultural consensus. Can we understand it this way?

Mable: Yes, at least early Meme Coins did take this form.

Edison: When I was podcasting with Professor Liu Feng and Jack before, I suddenly "realized" the logic of content tokens. My definition of Web3 is actually very simple: the ideal state of Web3 is "posting content as easily as updating a social media status," but from the moment that content is posted, it becomes a tradable asset.

If our blockchain infrastructure's performance is good enough and costs low enough, then theoretically, every piece of content published is not just the generation of information, but also the creation of an asset. On traditional social media, posting content only generates data that is almost impossible to price effectively and trade. However, in Web3, our content can be commoditized at a very low cost (even fractions of a cent), thus possessing liquidity and market pricing capability.

BlockBeats: So, your logic is: by "commoditizing" each piece of content, we can filter out the truly valuable part of the massive amount of content, is that right?

Edison: Exactly. You don't even need to care about the content in your social media feed; as long as the content someone posts is widely recognized at a certain point in time, it will naturally reflect its price. This commodification mechanism itself is a filter that helps us discover genuinely valuable content.

BlockBeats: This perspective is quite interesting. In the past, we used to measure "good content" based on its traffic performance and long-term reference value. But if we measure content as an asset, it is equivalent to adding a "price discovery" dimension, possibly even a whole new content discovery mechanism?

Edison: Exactly, this is also why I pay attention to Trends.fun. It truly shows me the potential and future space behind "content assetization."

WANG Chao: I think the current situation is actually quite integrated. In this field, there is an interesting phenomenon emerging— the boundary between Meme coins and content coins is becoming increasingly blurred. Take the Meme coins on Pump.fun, for example. They are very honest, straightforwardly admitting that they are merely symbols of attention, so these tokens operate 100% based on hype and attention. This purity has become their characteristic.

On the other hand, platforms like ZORA and Friend.Tech are trying to inject more substance into their tokens. For instance, Friend.Tech, upon closer analysis, has indeed built a value system, but this system is not very stable, and the value is hard to measure accurately. ZORA is currently rewarding creators and developers with tokens, which is the right direction. However, the attractiveness of these additional values is still not sufficient, fundamentally carrying strong Meme attributes.

Interestingly, now both sides are learning from each other. On the Meme coin side, Pump.fun is starting to position itself as a creative platform, aiming to create a more substantial narrative. On the content platform side, there is a particular envy of the Meme's virality and market heat. This two-way borrowing and integration are giving rise to some new gameplay. I think this trend is particularly worth observing because it reflects the market's search for a balance— wanting to maintain the viral power of Meme while also aiming to establish a more sustainable value foundation.

Liu Feng: I am pondering the essence of content value. Currently, it seems that whether it's a platform like Pump.fun (which doesn't truly focus on the content itself) or the current popular concept of "content tokens," they are essentially using content creation to drive traffic and incentive mechanisms.

What the other group now calls content tokens likewise hopes that the continuous process of creating content will drive traffic and produce the kind of short-term consensus we just discussed. The core logic of the two models is therefore the same: both attempt to form short-term consensus through traffic aggregation during content creation, and the phenomenon observed so far is that this market consensus only emerges with sufficient traffic. The ultimate business logic points in the same direction: monetizing traffic and user attention. For now, this seems to be the only path to realizing content value, and it also reflects an inherent contradiction in the value creation process.

Mable: This is especially interesting. I found that some content has very low reading volume on Twitter, but trading is very active within private circles. This made me realize that the current consensus monetization method is undergoing subtle changes. In the past, we found it difficult to directly monetize all public domain and private domain traffic through a single "container," or to monetize across various domains through this "container."

Previously, our methods of monetizing traffic were very fragmented: public domain relied on page views, and private domain relied on subscriptions. But now, through the "container" of content tokens, for the first time, different channels of consensus have been consolidated into the same asset. Imagine, when you issue a content token: if the market recognizes the traffic value of this content, even if not everyone understands this relationship, as long as a part of the people accept this logic, this token can freely flow between the public and private domains.

This creates a new possibility: tokens are like a thread that can string together the consensus value scattered across various channels. Although many people still cannot understand the equation "high likes = high financial value," when some pioneers start to accept this logic, the token's contract address itself becomes the best dissemination medium.

The most ingenious part of this mechanism is that it is no longer limited by the traffic rules of a single platform, but allows value to be discovered, recognized, and transacted anywhere. Although this shift is still small, it does represent a completely new way of consolidating value.

Liu Feng: Similar to the value discovery process of so-called long-tail assets.

Edison: This actually touches on a fundamental difference between Web2 and Web3. In the Web2 era, traffic is the endpoint, and platforms pursue a pure attention economy. But Web3 has taken a crucial step forward—traffic is just the starting point, and the real endpoint is to form value consensus.

It's very clear with an example: some Web2 projects claim to have tens of millions of users, but if this traffic cannot be converted into lasting value recognition (e.g., no one is willing to pay or hold), the ultimate value will still be zero. Conversely, looking at certain NFT projects, the initial user base may not be large, but holders strongly believe in their value, and this strong consensus can actually support a lasting price.

So the innovation of Web3 lies in: it has established a value transfer mechanism from traffic to consensus. Traffic is just the sowing, while consensus is the reaping. And the creation of wealth is essentially a process of consensus aggregation. This explains why in the crypto world, a small community of true fans may be more valuable than a million-level mainstream audience.

Liu Feng: Based on this, can you analyze the situation Mable mentioned just now, for example, the so-called private-domain content token, what is the mindset of the trader?

Mable: What I meant earlier is that a "leader" may command large buy-side demand without having much virality on public social media. He can still take a content token, whether his own or one he discovered, and drop it into his own private-domain group.

Liu Feng: I see, so what you are saying is actually the monetization of the traffic of this leader.

Mable: Yes, it depends on how you define it; it's his private-domain influence.

BlockBeats: Base and Zora have indeed hit the mark in some aspects. Currently, the market still highly values traffic value, although content tokens are essentially more focused on aggregating private-domain value. The essence of content tokens is: first, you need to establish a small but highly acknowledged community that appreciates the content, with these core supporters forming the initial purchasing power base. However, it is undeniable that the attention economy and the traffic effect are still important driving forces in the current market.

Mable's data analysis on Base and Zora also confirms this point. The data shows that a significant transaction volume can be driven by content tokens alone. In comparison, the Solana ecosystem faces some challenges in its token issuance mechanism, such as high rental costs and issues with infrastructure fees like Metaplex. This also explains why Mable places such importance on traffic as a key metric.

Mable: It is necessary to distinguish clearly between the quantity of assets issued and actual traffic. In the current environment, especially with Twitter flooded by AI-generated content and automated replies (what we call "yap-to-earn" content), it is difficult to measure real traffic and influence accurately. Even with promotional efforts, the actual impact is hard to assess, and this superficial prosperity is often partly fake.

The core point is this: large-scale asset issuance can indeed create more participation opportunities, which is a fundamental condition for achieving mass adoption. Taking Solana as an example, assuming a daily issuance of 50,000 tokens at a deployment cost of 2-3 dollars each, the total cost per day would reach 100,000 to 150,000 dollars. If the issuance were increased to 500,000, the cost pressure would rise significantly. Solana's current cost structure remains relatively high, which is why previous derivative projects needed to invest a large amount of funds in developing automation tools (bots).
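The issuance cost arithmetic in this example can be checked with a short sketch. The 2-3 dollar per-deployment range and the two daily volumes are taken from the discussion; the function name and structure are illustrative assumptions, and real deployment costs vary with network fees.

```python
# Back-of-the-envelope check of the issuance cost example.
# Per-deployment cost range (2-3 dollars) and daily volumes come from the
# discussion; everything else here is a hypothetical helper.

def daily_issuance_cost(tokens_per_day: int,
                        cost_low_usd: float = 2.0,
                        cost_high_usd: float = 3.0) -> tuple[float, float]:
    """Return the (low, high) total daily deployment cost in dollars."""
    return tokens_per_day * cost_low_usd, tokens_per_day * cost_high_usd


if __name__ == "__main__":
    for volume in (50_000, 500_000):
        low, high = daily_issuance_cost(volume)
        print(f"{volume:,} tokens/day: ${low:,.0f} to ${high:,.0f} per day")
```

At 50,000 tokens a day the range is 100,000 to 150,000 dollars, and at 500,000 tokens a day it is 1 to 1.5 million dollars, which is the cost pressure being described.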

All of these discussions rest on one premise: for market mechanisms to filter value effectively, there must first be a sufficiently large base of issued assets. The challenge for the Zora platform is this: if all content is limited to native Zora issuance, then even once it is tokenized, the vast majority (possibly 99.99%) may lack real value, and value discovery is genuinely difficult in such a limited pool. In contrast, on the Twitter platform it may be enough to select the 10% of content that is high quality and tokenize it to enable effective trading.

BlockBeats: So, the number of participants is also a crucial point.

Mable: I think so too. In a typical quality content ecosystem, 5% of people create and 95% are readers. I was discussing Trends with an investor, and he pointed out that many creators with a keen sense of the network but limited initial capital may discover hundreds of high-quality content pieces every day and earn revenue if about 5% of those pieces convert. This model is theoretically feasible, and it illustrates the situation well. However, when the issuance cost per piece reaches 2-3 dollars, a daily outlay of 150 dollars is a substantial threshold for these individuals. This not only dampens creativity but also, to some extent, restricts the diversified development of the ecosystem.

BlockBeats: Just now, Teacher Mable also mentioned that much of the content is AI-generated. So, AI-generated content, or content tokens, do they really have value?

WANG Chao: First of all, I believe some AI content does have value, but whether it retains value after being packaged into tokens depends on the overall system design. We need to distinguish clearly between the value of the content itself and the value of content tokens, which are two different concepts. In fact, all data has certain asset attributes, even data stored on a personal computer. It is just that this attribute is usually weak and hard to price in a standardized way. The current push by governments and various institutions to recognize data as a balance-sheet asset is a good example: they are attempting to convert data accumulated in traditional ways into standardized assets through a specific valuation mechanism, even though this data may not belong to the Web2 category at all.

In terms of the development direction of Web3, I believe that putting social network content and other information on-chain is not a problem in itself. However, there is a difference between putting it on-chain and packaging it into a financial asset, a financial asset that may have a high degree of speculation. I still have doubts about this. Putting information on-chain can indeed create many possibilities, but it does not necessarily have to be overly financialized. Current designs often focus too much on efficiency and liquidity, ignoring the complexity of the real world. While this exploratory direction is valuable, a theoretically perfect financialization solution faces various human challenges and mechanism pitfalls in reality. Whether it can truly be implemented and sustained remains a big question mark.

I am skeptical of the free-market argument made earlier. Although I support free markets, I believe a completely free market mechanism will not necessarily produce the ideal results people expect, even if that possibility does exist. This is a supplement to the previous discussion.

I would like to take this opportunity to extend my thoughts. Today we are discussing content tokens, but looking at the development of the crypto field, whether content tokens, DAOs, or other directions, there is a hidden core logic: we are trying to minimize human factors, or human characteristics, in the system. This concept pursues a theoretically near-perfect automated system, created through carefully designed mechanisms and maintained and executed by a network that runs perpetually. This design does demonstrate unique aesthetic value and practicality in some areas. However, I have also begun to question whether this model is applicable in all scenarios. In fact, the embryonic form of this idea can be seen in the origins of the crypto movement, such as the cypherpunk line of thought. In the early days I fully agreed with this theory, but after observing a large number of projects in practice, I began to doubt this absolutist design approach.

The essence of design in the crypto field is fundamentally to marginalize human subjective value. Take DAOs as an example: regardless of how current implementations perform, the original design idea was to build an organizational framework in a highly abstract manner through consensus mechanisms and smart contracts. This model attempts to replace the parts of traditional organizations that rely on human judgment and subjective decision-making with a standardized, programmable rule system. The approach is indeed innovative: it attempts to create a collaboration system that does not rely on individual subjective value judgments but is executed automatically by code rules. The question, however, is whether this extreme abstraction can truly replace the complex interactions and value judgments in human organizations.

Yet this design approach overlooks a key element: the subjectivity of the participant. What we need to consider is where human subjectivity, judgment, and social relationships stand in these mechanized systems. Besides mechanically executing contract terms, should there still be room for other dimensions of participation? In the real-world operation of human organizations and societies, these non-mechanical interactive elements are crucial. Bitcoin's success has indeed proven that, in principle, an efficient system can be created through streamlined design and by eliminating certain "problem" factors, but this model is difficult to replicate in every area. Most projects simply cannot succeed by removing "human factors."

For example, take the once-trending Play-to-Earn concept, which essentially turned entertainment into labor. In the process, the core elements of gaming were systematically stripped away: the joy of resource exploration, the sense of growth from overcoming challenges, and other intrinsic values were marginalized and replaced by mechanical, repetitive gold farming whose sole purpose is profit. This design not only failed to liberate players but had the opposite effect: instead of attracting true gamers, it mainly attracted professional gold farmers from places like the Philippines. This reveals a deep-seated issue: when we overemphasize economic incentives, we may undermine the intrinsic value of the activity itself.

This model can indeed be packaged as a high-minded concept, for example touted as "decentralized work opportunities" where one can earn $5 or more a day from home. From a narrow perspective, letting laborers in some regions earn an extra $5 a day is not necessarily a bad thing. However, this cannot mask the underlying design problems. The current obsession with liquidity in the crypto space, including the design of certain content tokens, faces a similar dilemma. When the tokenization process is oversimplified, it often strips away many elements that we may not be able to perceive clearly but that are actually crucial. I cannot yet find the most appropriate wording, but the core issue is: does this simplification destroy the integrity of the original system?

BlockBeats: Let me summarize. When the financial logic of cryptocurrency is combined with fields like social and cultural content, it often leads to a potential problem: the spiritual and creative traits of human creation are easily marginalized. Taking content tokens as an example, the current mainstream practice tends to emphasize large-scale issuance and market selection mechanisms, which bears a striking resemblance to AI content production: generating a massive amount of content and relying on algorithms and market competition to filter out a few successful cases. In this process, human subjectivity and creative participation are greatly weakened, potentially resulting in a substantial disconnect between content tokens and their creators.

Liu Feng: Teacher WANG Chao's perspective indeed elevates the discussion to a new dimension. Our previous conversation mainly focused on aspects such as tradability and financialization, somewhat reducing content tokens to a kind of game. That discussion rested on an underlying assumption: the free market can discover value through consensus. Reality, however, is evidently more complex, and there are many things in the world that are not tradable.

Content creation driven by human emotion is unique. Many works, and the shared feeling that content carries, are treasured precisely because of that emotion. If we try to express this through transactions, however, it cannot be captured accurately. Many precious emotional connections and resonances cannot easily be quantified or traded; often the most sincere value of content exists only within specific relationships.

Excessive financialization can indeed lead to value nihilism. When we reduce everything to objects of transaction, we may lose the most essential, hard-to-trade dimensions of value. Most people's creativity is not necessarily very high, but one reason their work is still hard to replace with AI-generated content is consensus and sentiment. The predicament of AI content tokens lies here: even if the technology can simulate a consensus mechanism, the lack of consensus grounded in human emotion makes their value fundamentally different. This raises a deeper question: should we reserve space for values that cannot be financialized but are crucial to humanity, especially in a field like content creation that is inherently rich in humanistic traits?

Indeed, there are many valuable things AI cannot do, such as content that requires genuine emotion and life experience. Conversely, there are things AI can do that humans find difficult. The most direct example is monitoring whale transactions: programmatic content of this kind, if turned into a tradable product, is undoubtedly far stronger than anything we could produce manually. So the key still lies in demand. AI and humans each have their strengths, and there is no need to insist on one or the other.

The real difference lies not in who created the content but in what it can actually deliver: whether, in a given situation, people find it useful and genuinely gain something from it, be it an emotional connection, sentiment, knowledge, or simply information. As long as it touches people in some way, it works. So I think what Edison said is quite right: do not discriminate against AI creation, but do not underestimate the unique value of human creation either.

Edison: Here is a ready-made example. Everyone is chasing AGI now, right? If one day AGI truly emerges and speaks its first words, that moment will be very precious, and I would definitely be willing to trade Bitcoin for it. So what matters is not who creates the content, but whether the content itself can touch some people.

Carl: I often follow and trade AI-themed tokens, so I should have something to say here. I think we cannot completely negate their value, but there is an interesting data point: if you look at CoinGecko, the average lifecycle of AI-plus-blockchain concept projects from 2023 to 2024 is only 5 to 6 months. In essence, it depends on whether you can sustain community interest and token value. These AI content tokens are indeed prone to hype. Previously, in a bull market, many tokens could rise 30-40 times in two to three days and 100 times within a week. However, the projects that truly survive quickly give the token practical uses once its market capitalization rises. They aim to "squeeze" the token, for example by letting holders exchange tokens for AI tools that generate content, rewarding contributors who provide high-quality data or AI models, or allowing token holders to vote on the direction of AI development. Ocean Protocol is a good example; its tokenomics are designed around a data marketplace. Therefore, I believe we should not confine ourselves to asking whether AI content tokens have value; we can think more expansively about how to add more value to tokens.

This is not just about AI tokens; any project should think about how to expand its token's practical use cases. In this highly competitive, low-barrier industry, the key to surviving and even leading is to find a direction that is sustainable in the long term. The projects that have survived from last year until now seized the opportunity while actively doing practical work that gives their tokens real value and utility.

Mable: In the future, half of social media content may be generated by AI. By then, the source of content (human or AI) may no longer matter much. On this question, I think our generation may take financialization more seriously, while the younger generation (especially those born after 2005) has a completely different understanding of content financialization. For them, using tokens to express an attitude is as natural as liking or sharing is for us. This is not traditional investment behavior but a new way of expressing value.

This trend rests on the fact that the cost of content production has approached zero. In an environment of abundant information, people need to rely on scarcity to form consensus, and tokens provide exactly that carrier of scarcity. This does not mean that all content will become mechanized. Take podcasts, a medium that emphasizes human connection: although their data transmission efficiency is low, this "inefficiency" brings a unique sense of intimacy. I don't think this is contradictory; not everything has been reduced to some form of labor or something impersonal.

Of course, on the other hand, there are some media that do not emphasize efficiency, such as podcasts. The human vocal cords can only transmit 10 bytes of information per second. Conversations are slow-paced, and when you listen to a podcast, what you are often responding to is your interaction with, and feelings toward, that person. So it's not just about the content itself; such content lacks strong transactional attributes and may carry a sense of possession.

Going back to the previous topic, the key is that we may be taking financialization too seriously. When a token serves as a data container, switching from one content token to another is essentially an exchange of information and value. The same goes for GameFi, for example; we cannot simply say that the only participants are users engaged in gold farming. Some may argue that financialization allows for better regulation, which is also a reasonable view. I often ponder why young users participate in projects like xxx.Fun (such as Pump.fun and Trends.fun); they may not be there purely for speculation, but rather to find new ways of expression. This intergenerational cognitive difference may be the future trend that we need to understand.

Liu Feng: Let me ask a follow-up. If we go by your explanation, might this "container" of content tokens, or the types of content it can hold, not be as diverse and colorful as we think?

Mable: I wouldn't say that. Anyone can mint freely, but I don't believe people will frantically trade something like a podcast. You can still mint a coin for it, though; some people will be willing to buy it, and some will simply hold it. That's what I mean.

Liu Feng: But in theory, that is not a good fit for this kind of carrier, is it? Isn't simple, blunt content the best fit?

Mable: It's like short-form content: short and refined. I think it's definitely more suitable.

Liu Feng: In the next ten years, the form and mode of content will undergo earth-shaking changes. Ten years ago we could not have imagined that short videos would become mainstream media, yet platforms like TikTok have completely reshaped the content industry. When the medium of communication is transformed, not only does the form of content change, but even the way we discuss the value of content becomes completely different. We should step outside our present framework of thinking to look at this issue. The forms of content and modes of communication we understand today may be completely overturned in the future. If tokens really become the mainstream content carrier, the rules of the game for the entire content industry will be rewritten. After all, who could have imagined back then that short videos would replace text and images as the main form of content?

Edison: Yes, it is actually foreseeable. With the rapid development of AI technology, the speed of content production and data generation will grow exponentially, perhaps to 100 times, 1,000 times, or even more than today. The traditional "publish-like" model will be completely overturned, and the productivity of these previous-generation actions will be directly amplified, leading to an unprecedented data explosion across the whole Internet. But the key point is this: the total amount of human attention is constant, and there are only 24 hours in a day. This creates a fundamental contradiction: when content supply expands without limit while the demand side (user attention) remains constant, the rules of the game for the entire content industry will inevitably be reshaped. As Liu mentioned, future content production and dissemination will require entirely new solutions.

Liu Feng: Everything is changing. Think about how we used to express our likes and dislikes of content decades ago: if we felt a program was not good, we would simply turn off the TV; if the content was not good, we would throw away the newspaper; if we liked it, we would share it with friends. In the Internet age, liking and sharing links have become new ways of interaction. In the future, buying and selling behavior itself may become our most direct feedback and way of interacting with content.

Perhaps we can consider a different perspective: as Teacher WANG Chao mentioned, the forms of content that content tokens cannot carry, and that are not suited to financialized dissemination, will become all the more precious in the real world. Precisely because they do not fit into this new system, these seemingly "outdated" forms of interaction retain the purest core of value.

In this new form, financialization will become the underlying logic; this is the essence of the field. Two modes will coexist: on one side, valuable humanistic interactions that cannot be tokenized, and on the other, a thoroughly financialized new content ecosystem. This may be the most interesting part of the future.

BlockBeats: Lastly, let's discuss a question everyone is particularly concerned about: are content tokens really going to make a comeback, and what would be the necessary conditions for that?

Mable: Today I posted a tweet that was originally intended to spark a discussion within the Solana team about the importance of performance. Previous discussions about low latency were often half-joking or speculative, or treated a high-performance public chain as something needed only in the future, but I believe a true global smart contract settlement layer must achieve high performance. The industry's value exchange mechanisms are already very mature (although not perfect), and the next key breakthrough is lowering the entry barrier through performance improvements so that more people can join this ecosystem. This, in my view, is the most important direction.

Liu Feng: I used to really hate this kind of financialization as a way of guiding and disseminating content; I found it very annoying. But the last time I chatted with Edison, he gave me a real moment of enlightenment: why are we overthinking this? In the future, we may be able to issue billions of tokens every day, each serving a specific purpose. This is the real breakthrough, or what is now popularly called "emergence." Just as AI suddenly undergoes a qualitative change once quantity accumulates, at that point completely new forms of content, modes of dissemination, and token use cases will naturally emerge.

We should boldly imagine such scenarios and accept outcomes that go beyond everyone's imagination: in the future, a completely disruptive content ecosystem may emerge. Look back at the development of the Internet over the past 30 years. In the early 2000s, when QQ was at its most popular, journalists were reporting on how QQ could monetize its memberships and whether QQ dolls could one day outsell Disney. The most cutting-edge business imagination of the time was just selling memberships and QQ dolls; who could have foreseen today's boom in short videos and live e-commerce? So now, more than ever, we need to break through the limits of our thinking and bravely envision those seemingly fantastical possibilities. Just as no one could accurately predict the form of the mobile Internet 20 years ago, the future of the content token economy will surely exceed the boundaries of our current imagination.

BlockBeats: So Teacher Liu's fundamental idea is the same as Teacher Mable's. If there is too much rubbish among today's content, it means not enough has been posted yet, and we need more content, including more high-quality content.

Liu Feng: There may be even more rubbish in the future, but that doesn't matter. Treasures can be found in rubbish too, so there is no need to be particularly fixated on this.

Mable: But isn't this mechanism just a mechanism for filtering out rubbish?

Liu Feng: It's also possible that it filters everything and finds that it's all rubbish.

Edison: At the current stage of our industry, the mission of Web3 is actually very clear: after the current meme craze, daily issuance of new assets may break through from today's 50,000 to 5 million or even 50 million. As the frenzy around meme coins has shown, when asset creation reaches this level, true mass adoption and ecosystem innovation will naturally emerge.

WANG Chao: My views align with those of the previous speakers. Although my remarks just now came from a somewhat negative, or critical, perspective, I have always strongly supported innovative exploration. I believe any emerging thing needs to be verified through practice, and no project can be perfectly designed at the outset; all successful startups mature gradually through continuous iteration. Therefore, I have always believed that such innovative attempts deserve particular encouragement. Personally, although I am no longer directly involved in project operations, as an investor I often invest in projects that seem a bit "strange," or even projects whose chances of success are visibly low. Among my considerations, first, I think innovative attempts are in themselves worthy of encouragement; second, regardless of success or failure, the process is particularly valuable. Therefore, I sincerely hope to see more teams exploring and experimenting in this field.

Carl: I fully agree with this point of view: the experience of failure also has important value. The same applies to investing. There are many types of investment targets. Often you are investing in the team or founder, to see whether they are passionate; or in the sector, to see whether it is innovative enough. There are also various forms of investment, whether investing directly in startups or buying tokens; all are essentially ways to participate in the market. However, to ensure the continued development of the content sector, there are still many key issues that need further exploration.

In 2021, I also personally dabbled in the related field (let's not talk about specific failed cases for now), from which I deeply realized that sustainability is the core of whether a Meme or Content Token can survive in the long term. How to spark market hype and sustain it is a highly challenging puzzle.

Furthermore, I hope to see more exploration of the content ecosystem, such as incentive mechanisms: how to balance token rewards to incentivize content creators while attracting investors; the balance between speculation and investment: how to distinguish between short-term speculators and long-term supporters and coordinate the interests of both parties?

The content sector is indeed highly challenging. I have personally tried and failed, so I understand the difficulties involved: the sustainability of community operations is crucial, and external factors such as market cycles must also be considered. Finally, I hope to see more innovative models emerge in this field, rather than simple replication of existing platforms (such as some "content creation-token issuance-on Curve-community self-destruction" routines). Content tokens are still a relatively new concept that requires more mature mechanisms to drive their development.

BlockBeats: Today, we delved deep into the theme of "The Value of Content Tokens" and further expanded the discussion to analyze AI tokens and the future form of content consumption. This discussion covered multidimensional thinking with considerable depth and breadth.

Here, I would like to provide a brief summary: the current question of "whether content tokens have value" may itself be somewhat of a misconception. At this stage, the key is to create richer content assets to attract more participants. Through large-scale practice, we will have the opportunity to identify forms of content assets that may generate value in the future—these forms may exceed our current imagination.

Additionally, I would like to emphasize a key point that the audience should pay attention to: cultivating network sensitivity is crucial. With the continuous emergence of more diverse content assets, it is recommended that everyone draw inspiration from the mindset of Meme investing, deeply consider how to establish broader consensus, or explore other innovative paths.

Due to time constraints, we regretfully need to end today's Space. Once again, thank you to all the guests for participating in the discussion, and thank you to all the listeners for joining us. See you in the next Space.

Space Link: https://x.com/i/spaces/1vAxRDOWWqkGl

Join the official BlockBeats community:

Telegram Subscription Group: https://t.me/theblockbeats

Telegram Discussion Group: https://t.me/BlockBeats_App

Official Twitter Account: https://twitter.com/BlockBeatsAsia
